Angle

The Data Foundations of Responsible AI Adoption

- Information governance

As AI becomes embedded in enterprise workflows, legal and compliance leaders are stepping into a new role: not just managing risk but shaping data strategy for the structure, controls, and accountability needed to responsibly adopt AI, including enterprise-wide tools such as Copilot for Microsoft 365.

This series explores how legal and compliance teams can lead the charge toward responsible AI readiness.

The Promise and the Pitfalls

AI offers a powerful new tool to streamline knowledge worker tasks like marketing strategy creation, financial budget proposals, competitive analysis, and client service case summarisation. However, the same capabilities that make AI so compelling also put sensitive data at risk of overexposure.

Prior to the widespread adoption of AI, data storage and access methods differed across companies and even functions. Users, and even leadership, often had a limited understanding of what sensitive information was accessible and to whom. In practice, sensitive documents stayed safe largely because most employees did not know they existed or where to find them. Now, tools such as Microsoft Copilot can access and surface any data a user has permission to view. This can inadvertently reveal sensitive content (e.g., business strategy documents, privileged communications, or personally identifiable information (PII)) that was never meant to be widely shared.

Historically, access controls were designed for manual workflows. A user might have access to thousands of files, but without a search prompt or direct navigation, they’d never encounter most of them. AI changes that. It synthesises and surfaces information from across the data estate, producing new content that puts sensitive details at risk. This shift demands an intentional and cautious approach to data governance.

Understanding Your Data Estate

Before enabling AI, legal teams must take a hard look across their entire data landscape. This includes knowing where data is stored, who has permission to access it, and what types of information the organisation classifies as ‘sensitive.’ Defining criteria for sensitive information is a critical first step in limiting access permissions without leaving data vulnerable.

This discovery phase is the foundation for AI implementation. It supports compliance with privacy laws, and it also clarifies what data must be retained for regulatory reasons, what should be defensibly deleted, and how strictly user permissions should be tightened.
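To make the discovery step concrete, here is a minimal Python sketch of an inventory record that captures where a document lives, who can reach it, and which sensitivity criteria it matches. The patterns, the `InventoryRecord` type, the `scan_document` function, and the sample path are all hypothetical illustrations; real criteria depend on your organisation and jurisdiction, and production discovery would rely on dedicated tooling rather than a handful of regular expressions.

```python
import re
from dataclasses import dataclass, field

# Hypothetical sensitivity criteria; every organisation defines its own.
SENSITIVE_PATTERNS = {
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "privileged": re.compile(r"attorney[- ]client privilege", re.IGNORECASE),
}


@dataclass
class InventoryRecord:
    location: str                      # where the document is stored
    allowed_groups: list[str]          # who currently has access
    matches: list[str] = field(default_factory=list)  # criteria that matched


def scan_document(location, text, allowed_groups):
    """Record which sensitivity criteria appear in a document's text."""
    matches = sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(text)
    )
    return InventoryRecord(location, list(allowed_groups), matches)


record = scan_document(
    "finance/q3-plan.docx",
    "Contact jane.doe@example.com. Subject to attorney-client privilege.",
    ["All Employees"],
)
print(record.location, record.allowed_groups, record.matches)
```

A record like this pairs each sensitivity finding with the groups that can currently see the document, which is the information needed to decide whether permissions should change.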

The quality of your organisation’s data plays a significant role in producing effective results. When AI draws on outdated, duplicated, or inaccurate data, its outputs become less reliable, which undercuts the workflow and productivity gains it is meant to deliver. Many organisations have spent years hoarding data, and the rise of AI is forcing a long-overdue assessment of data quality and retention.

Classifying and Protecting

Not all data is created equal, and your security controls should reflect that. Highly sensitive content should be encrypted, tracked, and monitored. Less sensitive data may require lighter controls but should still be monitored.

This is where responsible AI experts and tools like Microsoft Purview come into play. By applying trainable classifiers and sensitivity labels, organisations can automate the identification and protection of critical data. Real-time Data Loss Prevention (DLP) and insider risk management tools then act on these classifications to block risky actions, warn users, or trigger alerts.
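As an illustration of how a classification can drive a protective action, the following Python sketch maps a sensitivity label and a user action to one of three outcomes: allow, warn, or block. It is not Microsoft Purview's API or policy syntax. The label names, the action names, and the `dlp_decision` function are assumptions made for this example only.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical label tiers, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


# Hypothetical actions that move content outside the organisation's control.
RISKY_ACTIONS = {"share_external", "copy_to_usb"}


def dlp_decision(label: Sensitivity, action: str) -> str:
    """Return 'allow', 'warn', or 'block' for an action on labelled content."""
    if action not in RISKY_ACTIONS:
        return "allow"
    if label >= Sensitivity.HIGHLY_CONFIDENTIAL:
        return "block"
    if label >= Sensitivity.CONFIDENTIAL:
        return "warn"
    return "allow"


print(dlp_decision(Sensitivity.HIGHLY_CONFIDENTIAL, "share_external"))
print(dlp_decision(Sensitivity.CONFIDENTIAL, "copy_to_usb"))
print(dlp_decision(Sensitivity.INTERNAL, "share_external"))
```

The point of the sketch is the tiering: controls tighten as the label rises, so less sensitive content is not burdened with the strictest rules.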

Educate and Empower

Even the best technical controls can’t prevent every mistake. User education and training are essential to ensuring holistic compliance. Many professionals have not been trained in prompt engineering or in the nuances of deploying AI, and the results of an experienced user’s specific, well-crafted prompt will differ widely from those of a new user’s vague one.

User training should focus on both the “how” and the “why.” Teach users how to write effective prompts but also help them understand the implications of AI content permissions. Train different functional teams separately using use cases that apply directly to their work. Lastly, remind users that AI isn’t a search engine; it’s a collaborator that requires clear instructions and critical oversight.

Build a Resilient Framework

Organisations must accept that no responsible AI framework can be perfect. Any degree of content sharing can introduce risks, such as unintended exposure of sensitive data or AI prompts and responses that do not work as intended. Sharing too much information can hinder implementation efforts and increase the likelihood of errors related to broad data access or prompt management. On the other hand, over-restrictive sharing schemas will grind collaboration to a halt.

A responsible AI framework acknowledges this and strikes the right balance based on the organisation’s accepted risk appetite. To allow for collaboration while mitigating the associated risks, organisations should implement systematic procedures to address data overexposure, continuously monitor AI interactions, audit outputs, and improve access controls. Utilising a “least privilege” access model, where users only have access to information relevant to their responsibilities, is highly encouraged.
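A simple way to picture an overexposure check is to compare the groups that have been granted access to a sensitive item with the groups that need it for their responsibilities. The Python sketch below does that over a small in-memory inventory. The field names, group names, file paths, and the `find_overexposed` function are hypothetical, and a real audit would pull permissions from the systems that hold the data.

```python
# Hypothetical group names that signal organisation-wide access.
BROAD_GROUPS = {"Everyone", "All Employees"}


def find_overexposed(items):
    """Flag sensitive items whose granted access exceeds what is needed."""
    findings = []
    for item in items:
        # Groups that can reach the item but have no stated need for it.
        excess = set(item["granted"]) - set(item["needed"])
        if item["sensitive"] and excess:
            findings.append({
                "path": item["path"],
                "excess_groups": sorted(excess),
                "broad": bool(excess & BROAD_GROUPS),
            })
    return findings


inventory = [
    {"path": "legal/merger-memo.docx", "sensitive": True,
     "granted": ["Legal", "All Employees"], "needed": ["Legal"]},
    {"path": "hr/holiday-calendar.xlsx", "sensitive": False,
     "granted": ["All Employees"], "needed": ["HR"]},
]

for finding in find_overexposed(inventory):
    print(finding)
```

Only the sensitive memo is flagged here. The non-sensitive calendar is left alone even though it is broadly shared, which reflects the balance between protection and collaboration described above.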

Responsible AI Adoption

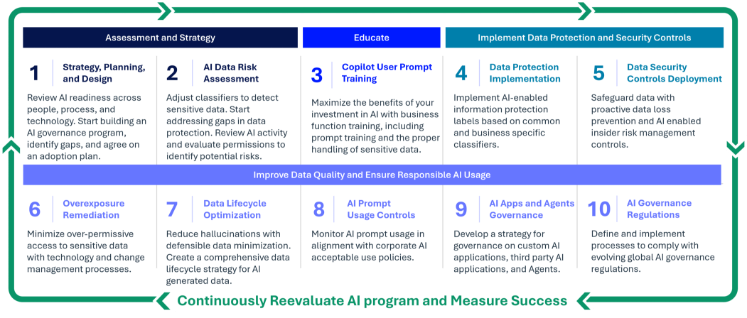

Eighteen months ago, Epiq launched its Responsible AI and Copilot Readiness offering with a vision to empower organisations to harness the full potential of AI while maintaining the highest standards of data security and compliance. The offering includes ten steps: it starts with an assessment, gets Copilot into users’ hands by step three, and protects against data overexposure over the long term with steps four through ten.

Since then, legal teams have seen a surge in interest surrounding AI tools that boost broader organisational productivity, improve accuracy, and unlock new ways of working. As organisations race to adopt these technologies, one truth has become clear: without a clearly defined data security strategy, the risks outweigh the rewards.

The Bottom Line

AI has the potential to transform work, but only if it’s implemented carefully and intentionally. By investing in data discovery, classification, user education, and proactive protection, organisations can unlock the benefits of AI without compromising security or compliance.

Keep an eye out for the first step in this blog series: Strategy, Planning, and Classification.

Jon Kessler, Vice President and General Manager, Information Governance, Epiq

Jon Kessler is Vice President and General Manager of Information Governance within Legal Solutions at Epiq, where he leads a global team focused on helping clients unlock the value of Microsoft Purview through Responsible AI and Copilot Readiness services. His team advises on a wide range of governance and compliance areas, including Microsoft Copilot, data privacy, insider risk, legal hold, records management, and M&A data processes. Under his leadership, the team was named a finalist for Microsoft Compliance Partner of the Year in 2022 and 2024 and won the award in 2023.

Since taking on the VP and GM role, Jon has doubled the size and revenue of the Information Governance business while deepening alignment with Microsoft’s compliance ecosystem. With a background in digital forensics and eDiscovery, he has led over 600 forensic investigations, served as an expert witness in federal and state courts, and regularly trains government agencies and Fortune 500 companies on AI, compliance, and legal technology.

The contents of this article are intended to convey general information only and not to provide legal advice or opinions.