
Safeguard AI Workflows With Classification-Driven Protection

Key Takeaway: Organizations that want secure and reliable AI must start with disciplined data classification and controls that protect both AI prompts and AI responses. Using sensitivity labels to drive Data Loss Prevention and other controls, organizations ensure that AI apps use only permitted data, and that generated responses inherit appropriate protections.

As data volumes surge and AI becomes embedded in daily workflows, organizations need clearer control of their data to avoid emerging risks, including unintended data exposure.

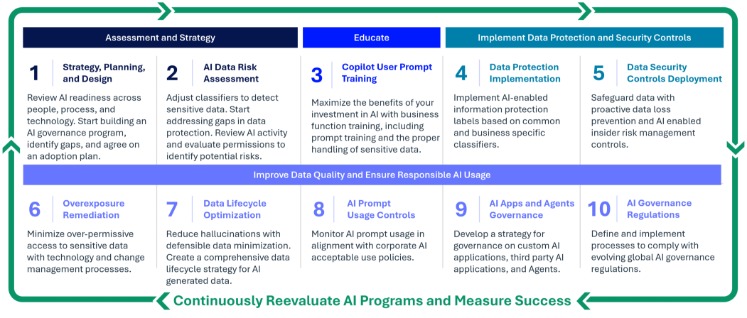

Step five of the Copilot Readiness Framework narrows in on data security controls that transform classification from a static label into active protection. With a strong classification foundation established in step four, Data Classification, organizations are now positioned to translate those sensitivity labels into real, enforceable safeguards. By carrying forward the same label-driven logic, step five shifts the focus from identifying sensitive data to actively controlling how it moves.

With this step, learn how classification becomes the engine that powers Data Loss Prevention (DLP), ensuring the rules you set in step four are consistently applied across both traditional workflows and AI interactions.

Use Classification To Drive Data Loss Prevention

With sensitivity labels and classifiers firmly in place from step four, you have the intelligence layer that consistently identifies sensitive content across the entire environment. Traditional DLP guards data in motion across email, cloud storage, and collaboration apps. Driven by those labels, DLP ensures that protections follow the data everywhere it moves, including within AI interactions.

Classification strengthens DLP by aligning enforcement to your label taxonomy, turning those labels into the rules that trigger warnings, justification requests, or blocks. Because AI can interact with any data a user or agent is permitted to access, protecting that data becomes even more critical. DLP for AI applies the same logic to safeguard both the prompt a user submits and the response the AI returns.

For example, traditional DLP might block an email that contains credit card numbers, or require the sender to justify it. Or, if someone tries to upload a spreadsheet containing Personally Identifiable Information (PII), Protected Health Information (PHI), or confidential business content to a personal app, DLP can stop the upload and display a policy tip that guides safer behavior.
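To make the credit card example concrete, here is a minimal sketch of one well-known way to spot candidate card numbers in text: a digit pattern followed by a Luhn checksum. It is an illustration only. Production DLP engines typically combine checksums with keywords, proximity, and confidence levels, and the function names `luhn_valid` and `find_card_numbers` are hypothetical.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True when the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in the text that look like valid card numbers."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


# 4111 1111 1111 1111 is a widely used test number, not a real card.
message = "Please charge 4111 1111 1111 1111 for the renewal."
if find_card_numbers(message):
    print("Policy match: message contains a possible card number")
```

The checksum step matters because a pattern alone would flag any long run of digits, such as order or tracking numbers, and generate noisy policy matches.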

In AI workflows, prompts labeled Confidential or Restricted can trigger DLP warnings, justification requests, or blocks. If an AI response exposes protected data, DLP can prevent copying, downloading, or sharing it, applying the same protections to AI as to traditional workflows. Now that data is consistently classified, DLP becomes the control that enforces those classifications in both traditional and AI applications.
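The label-driven logic described above can be sketched as a simple lookup that is applied identically to a prompt and to a response. This is a hedged illustration, not a product policy schema: the `Public` and `General` labels, the specific label-to-action assignments, and the `evaluate` and `check_interaction` helpers are all assumptions made for the example.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    JUSTIFY = "justify"
    BLOCK = "block"


# Hypothetical label-to-action policy. A real taxonomy and its rules
# come from the organization's own classification work.
POLICY = {
    "Public": Action.ALLOW,
    "General": Action.ALLOW,
    "Confidential": Action.JUSTIFY,
    "Restricted": Action.BLOCK,
}


def evaluate(label: str) -> Action:
    """Return the action for a label; unknown labels fall back to a warning."""
    return POLICY.get(label, Action.WARN)


def check_interaction(prompt_label: str, response_label: str) -> dict:
    """Apply the same label lookup to the prompt and to the AI response."""
    return {
        "prompt": evaluate(prompt_label),
        "response": evaluate(response_label),
    }


result = check_interaction("Confidential", "Restricted")
print(result["prompt"].value, result["response"].value)
```

Keeping one policy table for both directions is the point of the sketch: the prompt and the response are judged by the same labels, so AI interactions do not need a separate rule set.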

Data Loss Prevention Deployment Best Practices

Once classification is in place, deploying DLP requires a thoughtful approach that balances protection with productivity. These best practices help teams roll out controls in a structured, low-risk way, building user awareness, aligning enforcement with real business needs, and ensuring that safeguards extend consistently across both traditional workflows and AI interactions.

Start With Visibility (Audit Mode)

In audit mode, you can turn on tracking to see what people do and what information moves where, and to identify risky patterns. This provides visibility before any enforcement begins, so future policies can be tuned to avoid disrupting productivity.
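As a rough sketch of what audit-only visibility looks like, the snippet below counts how often labeled content reaches each destination without blocking anything. The `Event` fields, the sample data, and the `audit` function are hypothetical and stand in for whatever activity records an organization actually collects.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user: str
    label: str        # sensitivity label on the content
    destination: str  # where the content was sent


def audit(events):
    """Count (label, destination) pairs; nothing is blocked or changed."""
    return Counter((event.label, event.destination) for event in events)


# Illustrative sample data only.
events = [
    Event("alice", "Confidential", "external-ai-tool"),
    Event("bob", "General", "email"),
    Event("alice", "Confidential", "external-ai-tool"),
]

summary = audit(events)
for (label, destination), count in summary.most_common():
    print(f"{label} -> {destination}: {count}")
```

A summary like this shows which label and destination combinations occur most often, which helps decide where a future policy would have the most impact and the most friction.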

Progressive Enforcement

Progressive enforcement introduces controls in phases, so teams learn, adjust, and build user awareness before actions are blocked. It starts by informing users, then requires justification for risky behavior, and only enforces blocking once the impact is understood and validated.
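The phased rollout can be sketched as a small state machine. The phase names mirror the sequence described above (inform, justify, block), while the function names, return strings, and the `impact_validated` flag are assumptions made for illustration.

```python
PHASES = ("inform", "justify", "block")


def next_phase(current: str, impact_validated: bool) -> str:
    """Advance one phase only when the current phase's impact is validated."""
    index = PHASES.index(current)  # raises ValueError for an unknown phase
    if impact_validated and index < len(PHASES) - 1:
        return PHASES[index + 1]
    return current


def handle(phase: str, risky: bool, justification: str = "") -> str:
    """Decide the outcome of a user action under the given phase."""
    if phase not in PHASES:
        raise ValueError(f"unknown phase: {phase}")
    if not risky:
        return "allowed"
    if phase == "inform":
        return "allowed with policy tip"
    if phase == "justify":
        if justification.strip():
            return "allowed with justification"
        return "held for justification"
    return "blocked"


phase = "inform"
print(handle(phase, risky=True))
phase = next_phase(phase, impact_validated=True)
print(handle(phase, risky=True, justification="Approved client request"))
```

Gating each transition on validation keeps blocking as the last step, reached only after the earlier phases have shown how users are affected.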

Align Controls With Regulations and Business Needs

Different industries require different controls. In financial services, firms may need to identify and safeguard deal books, financial information, or client data. In healthcare, teams may need to detect PHI in prompts to unapproved AI tools and permit Copilot only when data is masked and access is limited. Aligning these controls with regulatory requirements supports compliant AI usage.

Engage Stakeholders Early

Bringing legal, compliance, security, and business leaders into the conversation early ensures that policies reflect real workflows. Their input helps design rules that align with how people work, reducing resistance and preventing users from being surprised by changes in enforcement.

Treat Policies as Living Controls

Effective AI governance is not set once. It adapts to new risks and work patterns. Teams should review violation trends, gather feedback from users, and watch for emerging AI behaviors that introduce new risk. These insights guide refinements to controls and ensure continued policy alignment.

Protect Devices With Endpoint Data Loss Prevention

Not all data starts in the cloud or stays in the cloud. Endpoint DLP brings classification-driven safeguards to user devices, giving teams visibility into and control over device-level actions. These actions include copying AI responses to USB drives, uploading sensitive datasets to external AI tools, and printing or screen-capturing protected responses. Sensitive information stays governed across every interaction, even when employees use AI tools outside the cloud. Together, classification and DLP allow organizations to use AI safely while blocking risky AI usage.

Extend Protection With Insider Risk Management

While DLP safeguards sensitive data in motion, Insider Risk Management (IRM) allows organizations to detect and respond to risky behavior that may not trigger traditional policy violations but still signals elevated exposure. IRM uses indicators such as unusual data access patterns, mass downloads, attempted exfiltration, or high-risk user activity to surface emerging threats early, especially in scenarios where AI accelerates the speed and volume of data interaction.
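One of the indicators mentioned above, mass downloads, can be illustrated with a simple baseline comparison. This is a sketch under stated assumptions, not how any IRM product scores risk: the `is_unusual` function, the multiplier `k`, and the `min_count` floor are hypothetical values chosen for the example.

```python
from statistics import mean, pstdev


def is_unusual(history: list[int], today: int,
               k: float = 3.0, min_count: int = 50) -> bool:
    """Flag today's download count when it far exceeds the user's own baseline.

    history   -- past daily download counts for the same user
    k         -- how many standard deviations above the mean counts as unusual
    min_count -- floor that stops small absolute numbers from being flagged
    """
    if today < min_count:
        return False
    if not history:
        return True  # no baseline yet, and the count is above the floor
    threshold = mean(history) + k * pstdev(history)
    return today > threshold


print(is_unusual([10, 12, 9, 11], 400))   # large spike over a small baseline
print(is_unusual([200, 220, 210], 215))   # high volume, but normal for this user
```

Comparing each user against their own history is what lets a signal like this surface behavior that breaks no explicit policy rule but still departs sharply from normal activity.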

By combining label-driven DLP with IRM insights, organizations gain a more complete view of how sensitive information flows and where potential insider risks may arise. This unified approach strengthens governance, supports compliance obligations, and ensures that controls keep pace with the fast-moving AI workplace.

Together, DLP, endpoint controls, and IRM create a layered defense that reinforces this step of the Copilot Readiness Framework, ensuring sensitive information stays protected no matter how people or AI systems interact with it.

The Bottom Line for Secure AI Workflows

Responsible AI adoption comes down to getting the fundamentals right. Classify data consistently and let those labels drive enforcement across Microsoft Information Protection (MIP), DLP, and broader oversight tools. This creates an environment where AI operates safely by design, not through manual controls or after-the-fact reviews.

Pursue meaningful AI innovation while staying compliant with regulatory, contractual, and ethical standards. Strengthen your ability to use AI as a transformative tool, supported by controls that keep your most sensitive information protected.

Learn more about Responsible AI and Copilot Readiness.

Manikandadevan Manokaran, Senior Data Security Consultant

As Senior Data Security Consultant at Epiq, Manikandadevan (Mani) focuses on enabling organizations to leverage Purview AI to enhance security, compliance, and data protection. Mani is a client-obsessed consultant with over 16 years of experience in Microsoft technologies. He specializes in cloud migration, security, and compliance. Mani has a strong passion for helping organizations modernize their data environments with intelligent AI solutions.

Paul Renehan, Vice President, Advisory

Paul Renehan is an executive leader with more than two decades of experience spanning data governance, information protection, and eDiscovery, with a growing focus on preparing organizations for the accelerating demands of artificial intelligence. As Vice President, Advisory, he leads a team of specialists who modernize enterprise data governance and protection strategies, ensuring information ecosystems are secure, compliant, prepared for AI, and positioned to drive business value.

The contents of this article are intended to convey general information only and not to provide legal advice or opinions.