Data Risk Assessment: Enabling AI Adoption

With data at the core of any mature, well-run business, AI tools like Microsoft Copilot and Gemini are exposing weaknesses in how sensitive information is managed. As these technologies become embedded in daily workflows, the heightened risk of overexposing sensitive information cannot be ignored. An AI data risk assessment acts as your first line of defense, ensuring sensitive data is protected from the start while you harness the power of automation.

What is an AI data risk assessment?

An AI data risk assessment is a way for organizations to effectively identify and protect sensitive information while continuing to adopt AI tools like Copilot. Key components include automated data discovery, classification, access monitoring, and policy enforcement. This offers an understanding of the content within your data estate, provides information about data flows during AI interactions across platforms, and supports risk mitigation efforts.

Because data that is stored online and accessible to AI is more vulnerable, organizations are increasingly attuned to the risks of data leakage. Without proper controls, AI tools can inadvertently expose confidential information, employee records, or proprietary business data through prompts, responses, or integrations with external platforms.

Minimizing data risk is about controlling sensitive information within the organization, knowing where that data is stored, and understanding who has access to it. Specific examples include personal data, financial records, and regulated information such as Payment Card Industry (PCI) data or Personally Identifiable Information (PII). Risk assessments should also account for third-party AI tools and integrations, providing insight into where data may flow outside the organization’s direct control. As AI advances, many organizations juggle multiple tools at once to perform different tasks. Each platform stores and accesses data, so each one is a potential point of exposure. Conducting AI data risk assessments enables organizations to anticipate areas of exposure and regulatory risk, establishing a solid framework for responsible AI deployment.
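As a rough illustration of "knowing where data is stored and who has access to it," the sketch below flags inventory items that carry a sensitive label and are shared with a broad audience. Everything in it is hypothetical: the `DataItem` record, the label names, and the group names are assumptions made for the example, not the output or API of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataItem:
    """One entry in a hypothetical data inventory."""
    location: str           # where the item is stored
    label: str              # classification label, e.g. "PII" or "Public"
    shared_with: frozenset  # users or groups that can open the item


# Assumed label and group names; a real estate would define its own.
SENSITIVE_LABELS = {"PII", "PCI", "Financial"}
BROAD_GROUPS = {"Everyone", "All Employees"}


def overexposed(items):
    """Return items that are both sensitive and shared with a broad group."""
    return [
        item for item in items
        if item.label in SENSITIVE_LABELS and item.shared_with & BROAD_GROUPS
    ]


inventory = [
    DataItem("hr/payroll.xlsx", "PII", frozenset({"All Employees"})),
    DataItem("hr/benefits.docx", "PII", frozenset({"HR Team"})),
    DataItem("web/press-kit.pdf", "Public", frozenset({"Everyone"})),
]

for item in overexposed(inventory):
    print(f"Review access: {item.location} ({item.label})")
```

Only the first item is flagged, because it is the only one that is both sensitive and broadly shared. Items like this are the ones an AI assistant with the user's permissions could surface unexpectedly.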

Aren’t companies already protecting sensitive data?

While many organizations have active data security protocols in place, traditional data protection tools like Data Loss Prevention (DLP) and Insider Risk Management (IRM) were designed for email, file sharing, and collaboration platforms. AI introduces new vectors for data exposure that these tools weren’t built to handle on their own. This creates the need to monitor AI technologies whether they sit inside the organization’s data ecosystem or outside it.

Microsoft offers Data Security Posture Management for AI (DSPM for AI) as a component of Microsoft Purview. This solution can be used in conjunction with DLP and IRM policies. It examines data estates for potential vulnerabilities and provides recommendations based on its analysis. DSPM for AI enables organizations to observe and manage data flow between AI applications and internal systems. Through integration with DLP and IRM, it adds AI-specific monitoring and supports policy enforcement across both traditional and AI environments.

For legal teams, this means gaining visibility into how sensitive data is accessed, shared, or used in AI interactions without overhauling the organization’s existing security infrastructure.

Tailoring AI Data Risk Controls to Your Organization

Classifiers are rules or models that detect sensitive information across an organization’s data estate. DSPM for AI creates default policies based on analytics, and it allows users to customize classifiers to reflect the unique risk profile of their organization.

Legal and compliance departments can work with IT and security teams to adjust classifiers based on regulatory obligations, confidentiality requirements, or internal risk avoidance policies. You can edit existing information types, create custom classifiers, and apply them to your DSPM policy, all within a centralized portal.
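To make the idea of a classifier concrete, here is a minimal rule-based sketch. It is not how Purview or DSPM for AI implements classifiers. The patterns are deliberately simplified, and the `matter_number` format is a hypothetical stand-in for the kind of custom classifier a legal team might request.

```python
import re


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


# Illustrative patterns only; production classifiers are far more nuanced.
CLASSIFIERS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "payment_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    # Hypothetical custom classifier for an internal matter-number format.
    "matter_number": re.compile(r"\bMTR-\d{6}\b"),
}


def classify(text: str) -> set[str]:
    """Return the names of all classifiers that match the text."""
    hits = set()
    for name, pattern in CLASSIFIERS.items():
        for match in pattern.finditer(text):
            if name == "payment_card":
                # Reduce false positives: require a valid Luhn checksum.
                digits = re.sub(r"\D", "", match.group())
                if not luhn_valid(digits):
                    continue
            hits.add(name)
    return hits


print(classify("Please summarize MTR-004217 and bill card 4111 1111 1111 1111"))
```

The checksum step shows why tuning matters: a bare pattern for card numbers would flag any long run of digits, while the added rule narrows matches to plausible card numbers. Adjusting classifiers to an organization's risk profile involves the same kind of trade-off between coverage and false positives.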

This flexibility ensures that AI risk controls are not one-size-fits-all. Instead, they evolve with each organization’s needs and legal and regulatory obligations.

Using Microsoft Built-In Recommendations for Classifiers

For organizations unsure where to begin, Microsoft offers automated classifier recommendations based on real-time traffic analysis. These recommendations help identify high-risk behaviors such as uploading sensitive data to AI sites or submitting prompts that could expose confidential information.

The ability to stack tools like DSPM for AI with other Microsoft offerings like DLP allows users to operate efficient workflows in a unified system while ensuring data security.

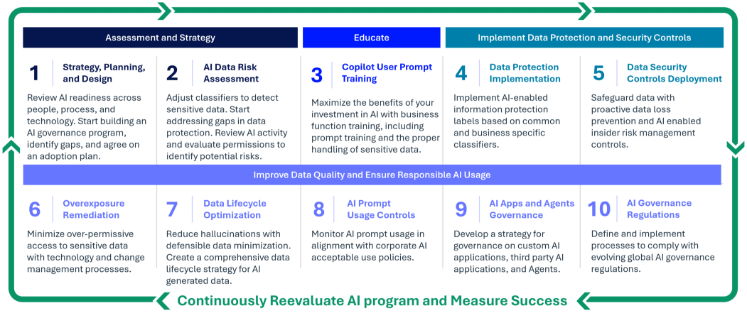

AI Governance Framework: Crawl, Walk, Run

Organizations can utilize a “crawl, walk, run” model to roll out new policies with stakeholders. The process includes:

- Crawl: Deploy policies in audit mode to observe behavior without enforcement.

- Walk: Monitor and alert stakeholders when they have violated a new policy, but do not block the behavior.

- Run: Enforce policies to block risky actions and ensure compliance.

This phased approach allows organizations to balance security with usability, avoiding disruption while building a strategic AI governance framework. Each phase should be supported by metrics such as policy violation rates, user adoption, and incident response times to guide progression and ensure accountability. The framework should be adaptable based on risk profiles. High-risk use cases may require faster progression to enforcement, while low-risk areas may remain in audit mode longer.
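The three phases can be sketched as a small state machine. This is an illustration under stated assumptions rather than a description of any product's behavior. The 5% violation-rate threshold, the rule that high-risk use cases advance regardless of the rate, and the choice to notify users in the enforcement phase are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUDIT = "crawl"    # observe and log only
    ALERT = "walk"     # log and notify, but do not block
    ENFORCE = "run"    # log, notify, and block


@dataclass
class Outcome:
    logged: bool
    notified: bool
    blocked: bool


def handle_violation(mode: Mode) -> Outcome:
    """Decide what happens when an action violates a policy in this mode."""
    return Outcome(
        logged=True,
        notified=mode in (Mode.ALERT, Mode.ENFORCE),  # assumption for ENFORCE
        blocked=mode is Mode.ENFORCE,
    )


def next_mode(mode: Mode, violation_rate: float, high_risk: bool,
              threshold: float = 0.05) -> Mode:
    """Advance one phase when the violation rate falls below the threshold.

    High-risk use cases advance regardless of the rate (an assumed rule).
    """
    order = [Mode.AUDIT, Mode.ALERT, Mode.ENFORCE]
    i = order.index(mode)
    if i == len(order) - 1:
        return mode  # already enforcing
    if high_risk or violation_rate < threshold:
        return order[i + 1]
    return mode


print(handle_violation(Mode.ALERT))
print(next_mode(Mode.AUDIT, violation_rate=0.20, high_risk=False))
```

In this sketch a low-risk policy with a 20% violation rate stays in audit mode, which gives teams time to refine the policy or train users before anyone is blocked.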

What Legal and Compliance Teams Gain From a Risk Assessment

Completing an AI data risk assessment through DSPM for AI offers several benefits that help legal and compliance teams address AI governance proactively. It allows for rapid deployment with minimal configuration, helps prevent data leakage in AI prompts and responses, monitors for unethical behavior, and supports regulatory compliance with GDPR, HIPAA, and NIST frameworks such as the RMF. DSPM for AI also supports policy enforcement by enabling automated governance controls and audit trails for AI interactions. Its real-time monitoring and alerting help teams respond swiftly to potential data misuse or policy violations. It also fosters collaboration between legal, risk, compliance, security, and data teams, promoting unified governance across AI initiatives. Together, these capabilities help ensure that innovation doesn’t come at the expense of compliance or confidentiality.

Protecting Data When Using Third-Party AI Tools

While Copilot is natively supported within Purview, third-party AI tools can be governed using connectors or by integrating the Purview SDK into custom-developed AI apps. Microsoft is actively working to extend visibility and control to additional platforms, which will offer organizations a more comprehensive view of the risks that come with AI implementation, such as inadvertent disclosure of sensitive information, lack of audit trails, or non-compliance with jurisdictional data privacy laws. Until that coverage is in place, AI tools outside Purview’s reach create a visibility gap that leaves organizations exposed to risks that fall outside of Microsoft’s governance framework. To close this gap, legal and IT teams should consider complementary solutions that extend monitoring and policy enforcement across all AI tools in use, and legal teams should proactively engage with IT and governance stakeholders to define acceptable use policies. A forward-looking approach enables organizations to stay ahead of emerging risks as these tools continue to develop.

With Great Risk Comes Great Responsibility

AI data risk assessment isn’t just an IT concern; it’s a legal and compliance priority. By integrating tools like DSPM for AI into their governance strategy, organizations can protect sensitive data, support regulatory compliance, and adopt AI responsibly. Before an organization jumps headfirst into AI adoption, a sound data governance framework gives it a strong foundation for moving forward with confidence.

Manikandadevan Manokaran, Senior Data Security Consultant, Epiq

Manikandadevan is a customer-obsessed consultant with 16+ years of experience in Microsoft technologies. He specializes in cloud migration, security, and compliance. Manikandadevan has a strong passion for helping organizations modernize their data environments with intelligent AI solutions.

The contents of this article are intended to convey general information only and not to provide legal advice or opinions.